Category: Web

-

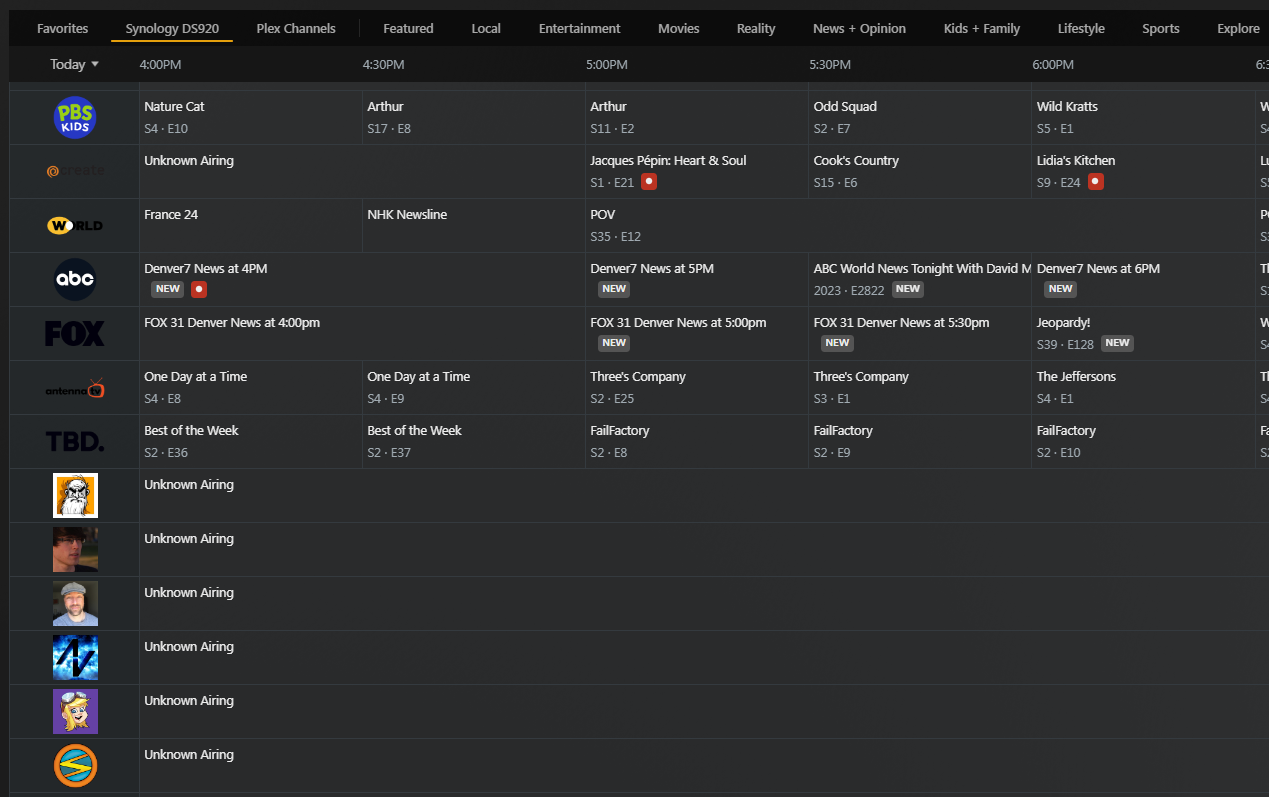

Live TV Tuners, Twitch, Plex, and screwing over even more Subscription Services

I have a lifetime subscription to Plex. I’ve had it for at least eight years. It’s cool! It lets me watch IPTV (built-in) and even has the option to connect to digital tuner+antenna combos on my network. I mean, I saw this “HDHomeRun” device I could buy on Amazon a couple of years ago and…

-

Plex, Synology, Xfinity and Me

So if your router doesn’t expose this DNS Rebind Protection setting, but you’re still seeing the “Unable to claim” error and you’ve tried everything, try manually setting the DNS servers on the server machine itself, rather than hoping it will pick up the ones from your modem or router like it’s supposed to.

-

The Reddit Thing

Yesterday I got a notification from YouTube stating that the COPPA/Made for Kids settings would be enforced starting in January. I’ve largely ignored these messages, as my channel is not that big, not very expansive, and not terribly interesting. However, when these settings apply to everyone, and I am a member of everyone, well, maybe it’d be worth…

-

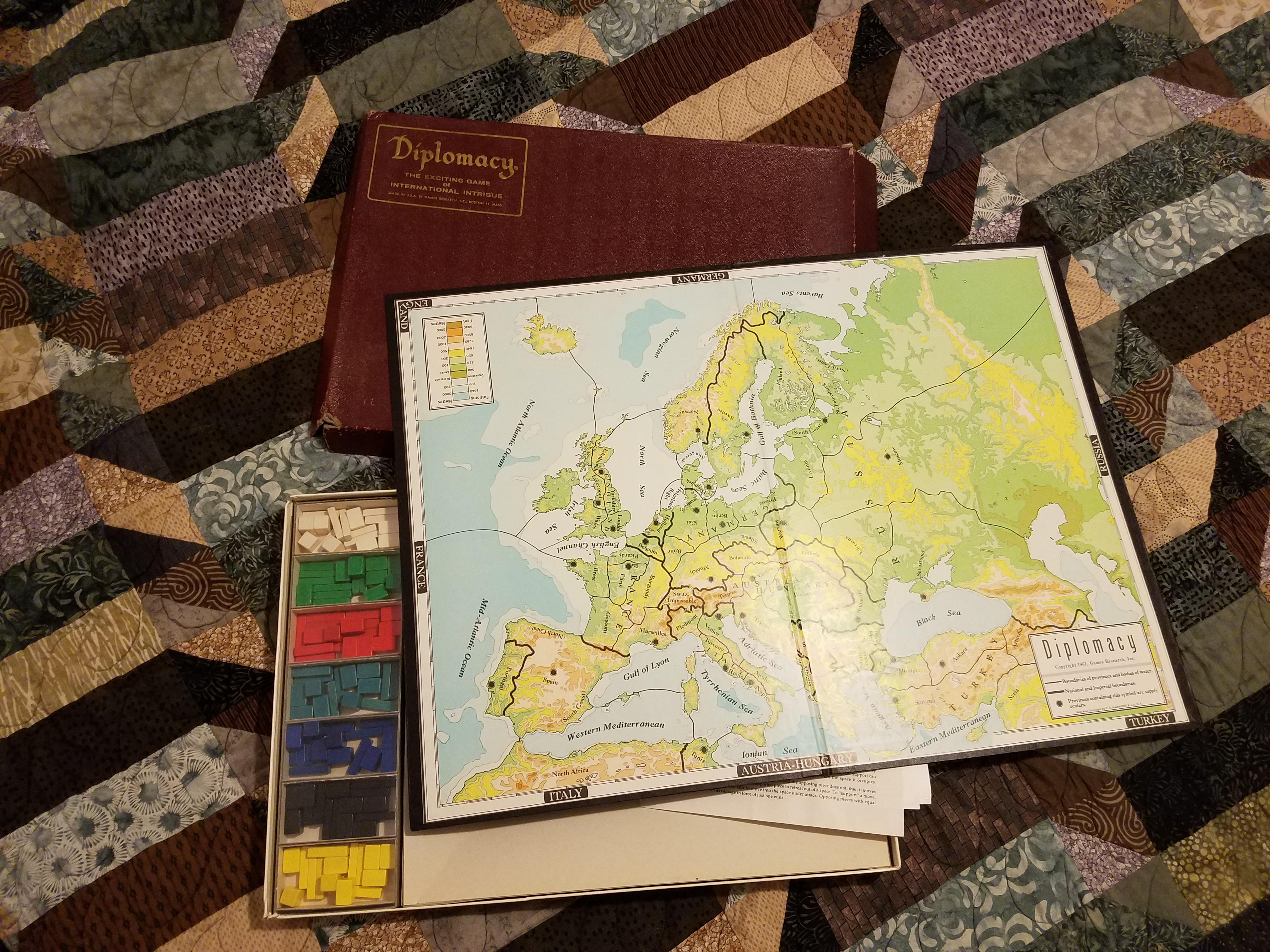

Diplomacy

I just finished listening to NoDumbQuestions’ Episode 53 – What Would Happen Every Time You Restarted Earth? I have to say, the discussion definitely got me thinking about two things, which I briefly mentioned in my comment on Reddit (I don’t know if my thoughts will take off at this point, but I wanted to put…

-

Christmas Again

So I guess I could say Merry Christmas. Then again, I WANT to say, “Hey it’s that time of year where I break out my Christmas theme for a blog!” I haven’t done this in a long while, and given that I’ve moved over to WordPress, I wonder if it’s possible. Stay tuned for theme-like…

-

Super Duper Status Update

It works! I was a bit annoyed that the WordPress Atom feed was XML-only. However, I do consider myself pretty good at googling things, and so I found this PHP library called “SimpleXML”, which solved a LOT of stuff for me. I used to display the first five Blogger titles on the homepage in…
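For readers curious what that looks like, here is a minimal sketch (not the post’s actual code) of pulling the first five entry titles out of an Atom feed with SimpleXML. It assumes the feed has already been fetched into a string, for example with `file_get_contents()`; the `atom_titles()` helper name is hypothetical.

```php
<?php
// Hypothetical helper, not the original post's code: return up to $limit
// entry titles from an Atom feed document using SimpleXML.
function atom_titles(string $xml, int $limit = 5): array
{
    $feed = simplexml_load_string($xml);
    if ($feed === false) {
        return []; // Unparseable XML: nothing to show.
    }
    $titles = [];
    // Atom puts each post in an <entry> element with a <title> child.
    foreach ($feed->entry as $entry) {
        if (count($titles) >= $limit) {
            break;
        }
        $titles[] = trim((string) $entry->title);
    }
    return $titles;
}
```

On a page, you would then loop over the returned array and pass each title through `htmlspecialchars()` before echoing it.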

-

Blogger Migration

So you may be wondering: what’s up with all these blog redesigns, Daniel? Well, I got sick of Blogger. A whole lot. And about a month ago, I decided to do something about it.

-

Manual Let’s Encrypt for cPanel

Note: EFF has deprecated using aptitude/yum/dnf and similar package managers to deploy Certbot on Linux systems; instead, it recommends Snap. At work I recently collaborated with our hosting provider to move our company website to a version of cPanel. Until then, there had been no way of running our site on…

-

A Fun Adventure in PGP

So I got curious about PGP keys and about signing and encrypting with them. I managed to figure out how to use the semi-popular Gpg4win (the standard Windows port of GnuPG) with its built-in Kleopatra GUI, Outlook add-ins and all the other fun stuff.

-

PHP Access Control List

A quick little Access Control List (ACL) snippet I made for PHP/HTML. Enjoy!

```php
<?php
$acl = array(
    // Populate with IP/Subnet Mask pairs.
    // Any zero bit in the subnet mask acts as a wildcard in the IP address check.
    array("192.168.10.24", "255.255.255.255"),
);
$acl_allow = false;
for ($i = 0; $i < count($acl); $i++) {
    $ip2chk…
```
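The excerpt above cuts off mid-loop. Here is a rough sketch of how the masked comparison it describes could work. It is not the original snippet’s code. It assumes IPv4 only, uses PHP’s `ip2long()`, and introduces a hypothetical `acl_allows()` helper. A client matches an entry when the client address and the entry’s address agree on every bit the mask keeps.

```php
<?php
// Hypothetical sketch of an IPv4 ACL check; not the original post's code.
// Each entry is an [ip, mask] pair. A zero bit in the mask means that bit of
// the address is ignored (a wildcard) when comparing.
function acl_allows(string $client_ip, array $acl): bool
{
    $client = ip2long($client_ip);
    if ($client === false) {
        return false; // Not a valid IPv4 address: deny.
    }
    foreach ($acl as [$ip, $mask]) {
        $net = ip2long($ip);
        $bits = ip2long($mask);
        if ($net === false || $bits === false) {
            continue; // Skip malformed entries.
        }
        // Compare only the bits the mask keeps.
        if (($client & $bits) === ($net & $bits)) {
            return true;
        }
    }
    return false;
}

$acl = [
    ["192.168.10.24", "255.255.255.255"], // exactly one host
    ["10.0.0.0", "255.0.0.0"],            // anything in 10.x.x.x
];

// REMOTE_ADDR is unset on the command line, which yields a deny.
$acl_allow = acl_allows($_SERVER['REMOTE_ADDR'] ?? '', $acl);
```

A page would then check `$acl_allow` and, for example, send a 403 response instead of the protected HTML when it is false.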